Key Dates

Openmesh Overview

Openmesh is building a fully decentralized cloud, data, and oracle network that eliminates the need for middlemen and centralized servers. By providing decentralized, permissionless cloud infrastructure, Openmesh ensures that blockchain nodes, Web3, and even Web2 applications can operate seamlessly without the threat of regulatory overreach or centralized control. With Openmesh, trust is embedded in the system itself, validated by a decentralized network of independent, anonymous validators, ensuring immutability and security by design.

Not your cloud, not your data

Vision & Mission

The Openmesh Vision

Openmesh was founded with the bold vision of democratizing access to data and infrastructure in a world increasingly dominated by centralized entities. Our goal is to build the next generation of open, decentralized infrastructure that will shape the future of a permissionless internet, preserve digital democracy, and guarantee free and open information flow for everyone.

The Openmesh Mission

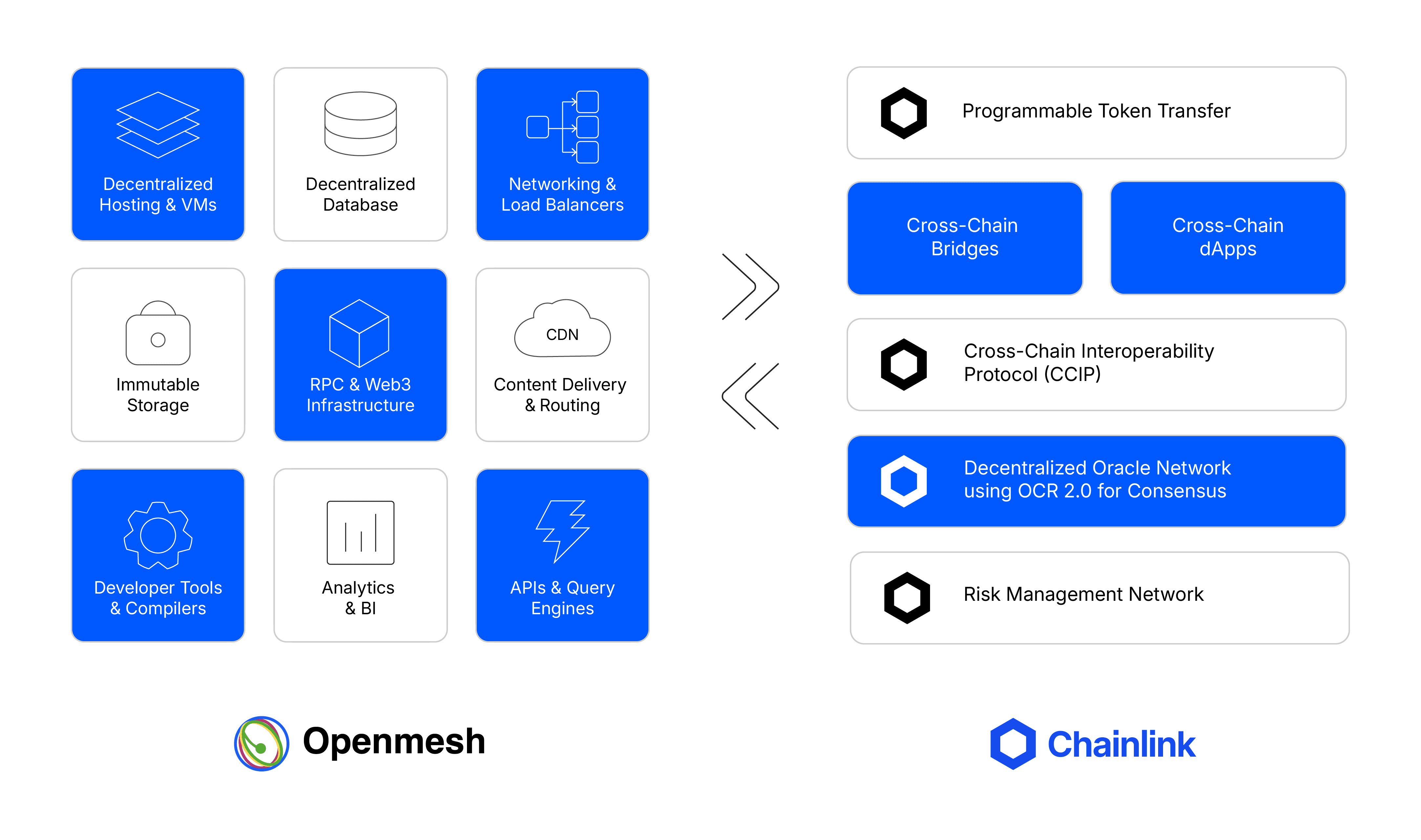

Our mission is to bridge the gap between Web2 and Web3 by accelerating the growth of Web3 through interoperability and the adoption of decentralized infrastructure. We strive to enable a more accessible and resilient digital future by empowering individuals, businesses, and developers to participate in an open internet without restrictions or middlemen.

Core Principles – Openmesh’s 10 Commandments

Decentralized Infrastructure as a Foundation: No single entity can alter the data, services, or network.

Censorship Resistance by Design: Peer-to-peer structure with end-to-end encryption, immutable records, and distributed authority.

Accessibility for All (Democracy and Equality): Open and accessible infrastructure, available to everyone—no KYC, licensing, or location-based restrictions.

Composability & Interoperability by Design: Seamless compatibility with multiple networks, devices, and protocols.

Transparency by Design: All operations, financials—including salaries and infrastructure costs—are fully public and auditable.

Local Interconnectivity: Nodes communicate directly, optimizing data routing and sharing across the network.

Redundancy and Reliability in Network Design: Multiple pathways and nodes ensure continuous accessibility and uptime, eliminating single points of failure.

Self-Healing Systems & Sustainability: Systems automatically adjust, recover, and scale without external intervention.

Continuous Improvements for Scalability: Designed to accommodate exponential growth and support diverse data types with evolving economic and incentive models.

Community Governance: Governed by a community-driven DAO, empowering users and developers to shape the network’s future.

Xnode

Core Principles

Openmesh's 10 Commandments

- Decentralized Infrastructure as a Foundation. No single authority can alter the data, its services & the network

- Censorship Resistance by Design. Peer-to-peer structure, end-to-end encryption, immutable records, distributed authority

- Accessibility for All (Democracy and Equality). Accessible to everyone, anywhere

- Composability & Interoperability by Design. Compatibility with various networks, devices and systems

- Transparency by Design. All operations and financials, including salaries and infrastructure costs, are public.

- Local Interconnectivity. Direct communication between neighbouring nodes, optimizing data routing & file sharing

- Redundancy and Reliability in Network Design. Multiple data pathways and nodes to ensure continuous data accessibility

- Self-Healing Systems & Sustainability. Automatically adjusting and recovering from changes

- Continuous Improvements for Scalability. Accommodate growth and diverse data types. Economic and Incentive models

- Community Governance. Governed by its community of users, developers

Transparency

- Open-source: All the tech we build is open-source, including applications used for data collection, processing, streaming services, core cloud infrastructure, back-end processes, data normalization, enhancement framework, and indexing algorithms.

- Open Accounting: All R&D and infrastructure costs, including the salaries we pay, are public. We have a real-time display of all running costs.

- Open R&D: The full project roadmap and real-time R&D progress are publicly available. Anyone can participate or critique.

- Open Infra: All our infrastructure monitoring tools, core usage, and analytics are public. The server usage, real-time data ingestion, computation, and service logs are all public.

- Open Verification: End-to-end data encryption. All data collected and processed by Openmesh, including streams, queries, and indexing processes, is stamped with cryptographic hashes. All hashes are published to a public ledger for transparency.
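As a rough illustration of the hash-stamping idea in the last item, the sketch below hashes a record with SHA-256 and appends the digest to an in-memory list standing in for a public ledger. The record layout, the `stamp` and `publish` names, and the choice of SHA-256 are assumptions made for the example; the source does not specify which hash function or ledger Openmesh uses.

```python
import hashlib
import json
from typing import Dict, List

def stamp(record: Dict[str, object]) -> str:
    """Return a SHA-256 hex digest of a record (hypothetical record format)."""
    # Canonical JSON (sorted keys, fixed separators) so equal records hash equally.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

def publish(ledger: List[str], record: Dict[str, object]) -> str:
    """Append the record's hash to a list that stands in for a public ledger."""
    digest = stamp(record)
    ledger.append(digest)
    return digest

ledger: List[str] = []
digest = publish(ledger, {"stream": "example", "seq": 1})
```

Anyone holding the original record can recompute the digest and compare it with the published one to check that the data was not altered.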

Product & Technologies

Innovations

Openmesh is built on the core principles of Web3: decentralization, transparency, immutability, and security, ensuring sovereignty for all users. These values are deeply embedded in Openmesh's design and architecture, making it a truly decentralized solution. Openmesh operates under a Decentralized Autonomous Organization (DAO), not as a private company. This governance model allows for community-driven decision-making and ensures that no single entity has control over the network. Since its inception in late 2020, Openmesh has invested approximately $8.78 million in research and development, entirely bootstrapped by its early founders without any external funds, venture capital, private sales, or ICOs. This self-sustained approach demonstrates our commitment to building a resilient and independent network. The DAO structure not only aligns with our decentralization goals but also fosters a collaborative environment where all stakeholders have a voice in shaping the future of Openmesh.

Core Documentation

API Documentation

Detailed guides and references for all Openmesh APIs.

https://docs.openmesh.network/products/openmesh-cloud/openmesh-api

User Guides

Documentation created by the community for the community.

https://github.com/Openmesh-Network

Integration Guides

Instructions for integrating Openmesh with other tools and platforms.

https://docs.openmesh.network/products/integrations

Our Core Principles

Best practices and protocols to ensure the security of your data and operations.

https://www.openmesh.network/litepaper#coreprinciples

Developer Tools

Resources and tools specifically for developers building on Openmesh.

https://github.com/Openmesh-Network

Troubleshooting

Comprehensive guides to help you resolve issues and optimize performance.

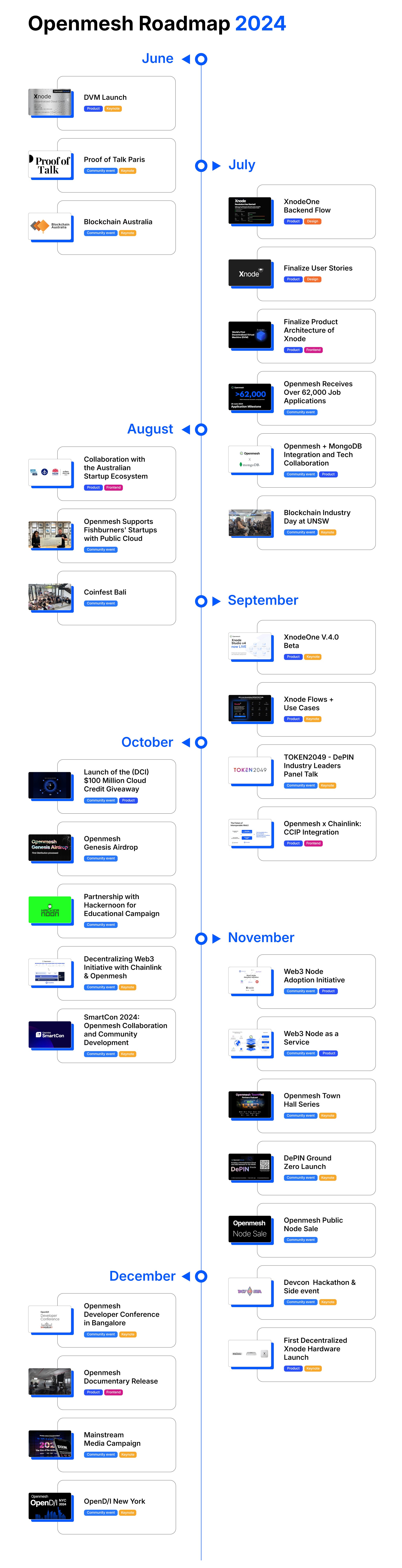

Roadmap

Governance & Transparency

Our Commitment to Governance and Transparency

- Decentralized Autonomous Organization (DAO): The Openmesh network is governed through a community-driven DAO model, where users, developers, and stakeholders participate directly in decision-making.

- Advisory Committees: Specialized advisory committees provide expert guidance on technical, ethical, and strategic matters.

- Strategic Oversight: Our framework ensures that long-term goals align with community values, with mechanisms in place to address risks and unforeseen challenges.

Transparency is at the heart of Openmesh’s philosophy. We are committed to sharing key decisions, progress updates, and financial information with our community to foster trust and collaboration.

Open Decision-Making: All major decisions are discussed and voted on through the DAO. The rationale behind key decisions is communicated openly to promote trust and accountability.

Financial Disclosure: Financial reports are publicly accessible, providing full transparency into our funding sources, expenditures, and financial health.

Regular Updates: We keep our community informed through blogs, progress reports, and community calls, where we share updates on challenges, milestones, and next steps.

Upholding Ethical Standards

- Code of Conduct: All contributors are held to strict ethical standards, ensuring responsible behavior in all activities.

- Whistleblower Policy: We maintain a robust whistleblower policy, protecting individuals who report unethical behavior and ensuring concerns are addressed promptly and transparently.

Infrastructure

Openmesh Core is the software responsible for maintaining consensus among the validator nodes in the network. It uses the Tendermint consensus protocol, a Byzantine Fault Tolerant (BFT) consensus algorithm, to ensure secure and reliable blockchain operations. The specific implementation of Tendermint used by Openmesh Core is CometBFT, written in Go.

Responsibilities of Core

Block Proposal:

Selection of Proposer: The core is responsible for selecting the proposer (validator) for each round in a fair manner, typically using a round-robin or weighted round-robin approach based on stake.

Block Creation: The selected proposer creates a new block with valid transactions and broadcasts it to all validators.
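A weighted round-robin of the kind mentioned above can be sketched as follows. This is a simplified illustration and not the CometBFT implementation: each round every validator's priority grows by its stake, the highest priority proposes, and the proposer's priority is reduced by the total stake. The `Validator` class, the example stakes, and the tie-break by name are assumptions for the example.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Validator:
    name: str
    stake: int         # voting power, assumed proportional to stake
    priority: int = 0  # accumulated proposer priority

def next_proposer(validators: List[Validator]) -> Validator:
    """Advance one round and return the validator chosen to propose."""
    total = sum(v.stake for v in validators)
    for v in validators:
        v.priority += v.stake
    # Highest priority wins; ties are broken by name for determinism (assumption).
    proposer = max(validators, key=lambda v: (v.priority, v.name))
    proposer.priority -= total
    return proposer

# Hypothetical validator set: "a" holds 3 of 5 units of stake.
validators = [Validator("a", 3), Validator("b", 1), Validator("c", 1)]
schedule = [next_proposer(validators).name for _ in range(5)]
```

Over any window of five rounds in this example, each validator proposes in proportion to its stake, and proposals from the largest validator are spread out instead of clustered.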

Voting Process:

Pre-vote Collection: The core collects pre-votes from all validators for the proposed block. Each validator votes based on the validity of the block.

Pre-commit Collection: After pre-votes are collected, the core collects pre-commits from validators that have seen pre-votes for the block from more than two-thirds of the voting power.

Final Commit: The core ensures that if a block receives more than two-thirds of the pre-commits, it is added to the blockchain.
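The two-thirds commit rule can be expressed as a small check. This is a minimal sketch; the function name and the use of abstract "voting power" units are assumptions for the example.

```python
def has_supermajority(votes_for: int, total_power: int) -> bool:
    """True when votes_for is strictly more than two-thirds of total_power."""
    # Integer arithmetic avoids floating-point rounding at the boundary.
    return 3 * votes_for > 2 * total_power
```

Note that exactly two-thirds is not enough: with 3 units of voting power, 2 pre-commits do not commit a block, while with 4 units, 3 pre-commits do.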

Transaction Validation:

Transaction Verification: The core verifies the validity of transactions included in the proposed block. This includes checking digital signatures, ensuring no double-spending, and validating any other protocol-specific rules.

State Transition: The core applies valid transactions to the current state, producing the new state that will be agreed upon.

Network Communication:

Message Broadcasting: The core handles the broadcasting of proposal, pre-vote, pre-commit, and commit messages to all validators in the network.

Synchronizing Nodes: The core ensures that all nodes stay synchronized, resolving any discrepancies in state or blockchain history.

Fault Tolerance and Security:

Byzantine Fault Tolerance: The core implements mechanisms to tolerate fewer than one-third of validators (by voting power) being malicious or faulty, maintaining consensus despite these issues.

Punishment and Slashing: The core is responsible for detecting and punishing misbehavior, such as double-signing or other forms of protocol violations, by slashing the stake of malicious validators.
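The Byzantine fault tolerance bound can be made concrete with a small calculation. The sketch assumes every validator has equal voting power, which is a simplification; with stake-weighted voting the same bound applies to voting power instead of node count.

```python
def max_faulty(n: int) -> int:
    """Largest number of faulty validators f that n validators can tolerate.

    Uses the standard BFT requirement n >= 3f + 1 (equal voting power assumed).
    """
    return (n - 1) // 3 if n > 0 else 0
```

For example, 4 validators tolerate 1 faulty validator, while 3 validators tolerate none.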

Consensus Finality:

Immediate Finality: The core ensures that once a block is committed, it is final and cannot be reverted, providing security and confidence to users and applications relying on the blockchain.

State Management:

State Updates: The core manages the application of committed transactions to the state, ensuring that all nodes have a consistent view of the blockchain state.

Data Integrity: The core ensures the integrity and correctness of the state data, preventing corruption and inconsistencies.

Protocol Upgrades:

Consensus Upgrades: The core may facilitate upgrades to the consensus protocol, ensuring smooth transitions without disrupting the network.

Feature Implementation: The core implements new features and improvements in the consensus mechanism, enhancing performance, security, and functionality.

Data Validation and Seeding:

Data Submission: At each block, each node is assigned a different data source to fetch data from until the next block. The data is then seeded by the core via IPFS, and a CID of the data is submitted as a transaction.

Data Seeding: The core is responsible for seeding the data in the network. The core seeds the data via IPFS.
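The per-block flow described above (assign a source, fetch, seed, submit a CID) might look roughly like the sketch below. Everything here is illustrative: the rotation rule, the node and source names, and the transaction layout are assumptions, and `placeholder_cid` is a plain SHA-256 digest and not a real IPFS CID, which is a multihash-encoded identifier.

```python
import hashlib
from typing import Dict, List

def assign_sources(nodes: List[str], sources: List[str], height: int) -> Dict[str, str]:
    """Rotate sources across nodes so that nodes get distinct sources each block.

    Assumption: there are at least as many sources as nodes, and rotating by
    block height stands in for whatever assignment rule the core really uses.
    """
    return {node: sources[(i + height) % len(sources)] for i, node in enumerate(nodes)}

def placeholder_cid(data: bytes) -> str:
    # NOT a real IPFS CID: a SHA-256 hex digest used as a stand-in.
    return hashlib.sha256(data).hexdigest()

def submission_tx(node: str, height: int, data: bytes) -> Dict[str, object]:
    """Build a hypothetical transaction that records the content identifier."""
    return {"node": node, "height": height, "cid": placeholder_cid(data)}

assignment = assign_sources(["n1", "n2"], ["s1", "s2", "s3"], height=1)
tx = submission_tx("n1", 1, b"example payload")
```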

Decentralized Service Mesh Protocol (DSMP)

Abstract

In active development

The Openmesh Decentralized Service Mesh Protocol (DSMP) is designed to enable decentralized service discovery, communication, and orchestration in the Openmesh network. The protocol allows individuals and organizations to run and manage services (called Service Mesh Workers) on Xnodes—physical or virtualized hardware—while allowing others to consume these services. By fractionalizing service mesh tasks, sharing resources, and enabling decentralized observability, DSMP provides a scalable, resilient, and decentralized alternative to traditional service mesh architectures. This paper outlines the architecture, protocols, consensus mechanisms, and the economic model underlying DSMP.

1. Introduction

Service meshes are a critical component of modern distributed systems, allowing services to communicate, discover, and manage one another. However, traditional service meshes like Istio and Linkerd operate in centralized environments, making them unsuitable for decentralized cloud platforms. The Decentralized Service Mesh Protocol (DSMP) from Openmesh addresses this gap by offering a fully decentralized approach to service communication, resource sharing, and observability.

DSMP allows anyone to run services, known as Service Mesh Workers, on Xnodes within the Openmesh network, while enabling others to consume these services. Instead of running isolated services or relying on centralized cloud infrastructure, DSMP offers a decentralized environment where service operators can share their resources and offer their services to the network.

2. Architecture of DSMP

2.1 Service Mesh Workers

A Service Mesh Worker is an independent service running on an Xnode. It can be a microservice, a compute task, or an application running on the Openmesh network. Service Mesh Workers can be made public or private:

Public Service Mesh Workers: These are advertised in the network, and anyone can subscribe to use them.

Private Service Mesh Workers: These are limited to specific users or groups.

2.2 Xnodes

Xnodes are the backbone of the Openmesh network. They represent physical servers, virtual machines, or custom hardware owned by individual users or organizations. Xnodes run services, store data, and participate in the Openmesh consensus mechanisms.

2.3 Decentralized Communication and Resource Sharing

At the core of DSMP is a peer-to-peer (P2P) communication model, where each Xnode can discover, communicate, and share resources with other Xnodes. Through this decentralized communication framework, DSMP enables:

Decentralized Service Discovery: Xnodes can discover available services via the Kademlia Distributed Hash Table (DHT).

Resource Fractionalization: Services do not need to run in isolation; they can utilize shared compute, storage, or networking resources offered by multiple Xnodes.

Service Observability: Service Mesh Workers advertise their usage statistics, allowing for network-wide visibility through the Open Observability Protocol.

2.4 Open Observability Protocol

DSMP integrates an Open Observability Protocol, providing a transparent and decentralized way for Service Mesh Workers to display their metrics:

Subscription Counts: Anyone can see which Service Mesh Workers are the most subscribed to.

Performance Metrics: Uptime, response time, and other service performance data are made publicly available.

Fault Detection: Any faults, errors, or failures are tracked and made visible, enabling automatic recovery and fault-tolerance mechanisms.

3. Protocols Enabling DSMP

3.1 Kademlia Distributed Hash Table (DHT) for Service Discovery

The Kademlia DHT provides a decentralized and efficient way for Xnodes to discover Service Mesh Workers within the network:

Key-Value Store: Each Service Mesh Worker is assigned a unique key in the DHT, mapping to its network location and metadata.

Efficient Lookup: Kademlia enables quick lookups for services, ensuring low-latency discovery of available Service Mesh Workers.

Fault Tolerance: The DHT is fault-tolerant, allowing for service discovery even if some nodes in the network go offline.
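Kademlia measures closeness between identifiers with the XOR metric, which is what makes lookups efficient. The sketch below shows that metric and a naive "k closest nodes" selection over a known list of node IDs. Deriving IDs with SHA-256 and the helper names are assumptions for the example; a real deployment would rely on the libp2p DHT implementation and its routing tables instead of a flat list.

```python
import hashlib
from typing import List

def make_id(name: str) -> int:
    """Derive a 256-bit identifier from a name (hashing scheme is an assumption)."""
    return int.from_bytes(hashlib.sha256(name.encode("utf-8")).digest(), "big")

def xor_distance(a: int, b: int) -> int:
    """Kademlia distance between two identifiers."""
    return a ^ b

def closest_nodes(key: int, node_ids: List[int], k: int) -> List[int]:
    """Return the k node IDs closest to key under the XOR metric."""
    return sorted(node_ids, key=lambda n: xor_distance(n, key))[:k]

# Small 4-bit IDs keep the example easy to follow by hand.
nearest = closest_nodes(0b1010, [0b1000, 0b0010, 0b1111, 0b1011], k=2)
```

A Service Mesh Worker's record would be stored on the nodes whose IDs are closest to the worker's key, so a lookup only needs to move toward smaller XOR distances.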

3.2 Libp2p for Peer-to-Peer Communication

Libp2p is the foundational networking layer that DSMP uses for peer-to-peer communication between Xnodes. Key features include:

Secure Communication: Provides encrypted communication between nodes to ensure privacy and integrity.

Transport Flexibility: Supports multiple transport protocols (TCP, QUIC, WebRTC) for efficient networking in different environments.

Service Discovery: Works in conjunction with Kademlia DHT to enable dynamic service discovery and task delegation.

3.3 Task Delegation with Resource-Aware Distribution

DSMP uses a Delegated Task with Resource-Aware Distribution model for assigning tasks to Service Mesh Workers:

Resource Awareness: Each Xnode advertises its available compute, memory, and storage resources.

Task Delegation: Tasks are assigned to Xnodes based on their available resources and capacity. Larger tasks are given to nodes that can handle them, while smaller nodes take on smaller, simpler tasks.

Load Balancing: Tasks are distributed dynamically to avoid overloading any single Xnode, ensuring even distribution of network workloads.
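One simple way to combine resource awareness with load balancing is to filter out Xnodes that cannot fit a task and then pick the least-loaded of the rest. The sketch below does this under stated assumptions: the `Xnode` and `Task` fields, the two resource dimensions, and the tie-break toward the smallest node that fits are all hypothetical choices, not the DSMP scheduling rule.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Xnode:
    name: str
    cpu: int        # free CPU cores advertised by the node
    memory_gb: int  # free memory advertised by the node
    load: int = 0   # number of tasks currently assigned

@dataclass
class Task:
    name: str
    cpu: int
    memory_gb: int

def delegate(task: Task, nodes: List[Xnode]) -> Optional[Xnode]:
    """Assign task to the least-loaded node with enough free resources."""
    candidates = [n for n in nodes if n.cpu >= task.cpu and n.memory_gb >= task.memory_gb]
    if not candidates:
        return None  # no node can currently run this task
    # Prefer fewer assigned tasks, then the smallest node that still fits.
    chosen = min(candidates, key=lambda n: (n.load, n.cpu))
    chosen.cpu -= task.cpu
    chosen.memory_gb -= task.memory_gb
    chosen.load += 1
    return chosen

nodes = [Xnode("big", cpu=16, memory_gb=64), Xnode("small", cpu=2, memory_gb=4)]
heavy = delegate(Task("heavy", cpu=8, memory_gb=32), nodes)
light = delegate(Task("light", cpu=1, memory_gb=1), nodes)
too_big = delegate(Task("huge", cpu=32, memory_gb=1), nodes)
```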

4. Consensus Mechanisms in DSMP

4.1 Proof of Stake (PoS)

DSMP uses Proof of Stake (PoS) to ensure the correctness of tasks performed by Service Mesh Workers:

Token Staking: Xnode operators must stake Open tokens to participate in task execution. This ensures accountability, as nodes that fail to perform tasks correctly can lose their staked tokens.

Task Verification: Other nodes in the network verify the correctness of completed tasks. If a node is found to be acting maliciously or failing to perform its task, it is penalized.
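The staking and penalty logic can be illustrated with simple bookkeeping. The reward amount, the 10% slash rate, and the function name below are hypothetical values chosen for the example; the source does not state the actual parameters.

```python
from typing import Dict

def settle_task(stakes: Dict[str, int], operator: str, task_ok: bool,
                reward: int = 5, slash_percent: int = 10) -> int:
    """Update and return an operator's stake after its task has been verified.

    reward and slash_percent are illustrative defaults, not protocol values.
    """
    if task_ok:
        stakes[operator] += reward
    else:
        # Integer arithmetic keeps token amounts exact.
        stakes[operator] -= stakes[operator] * slash_percent // 100
    return stakes[operator]

stakes = {"operator-1": 1000}
after_failure = settle_task(stakes, "operator-1", task_ok=False)
after_success = settle_task(stakes, "operator-1", task_ok=True)
```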

4.2 Proof of Resource (PoR)

Proof of Resource (PoR) is used to validate that Xnodes offering their resources can indeed deliver the promised compute or storage capacity:

Resource Verification: Before a task is assigned, the Xnode provides proof that it has the necessary resources (CPU, GPU, storage) to complete the task.

Continuous Proof: Xnodes must provide regular proofs to show that they are maintaining their advertised resources over time.

4.3 Byzantine Fault Tolerance (BFT)

DSMP integrates Byzantine Fault Tolerance (BFT) for task result verification:

Task Redundancy: For critical tasks, multiple nodes may be assigned the same task. BFT ensures that consensus is reached on the correct task result, even if some nodes behave maliciously or fail.

Fault-Tolerant Consensus: BFT allows the network to tolerate faulty or malicious nodes without compromising the accuracy of task results or network stability.
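For redundant task execution, one way to reach agreement is to accept a result only when more than two-thirds of the assigned nodes report the same value. The sketch below assumes results are comparable strings and that every node counts equally; both are simplifications made for the example.

```python
from collections import Counter
from typing import List, Optional

def agreed_result(results: List[str]) -> Optional[str]:
    """Return the result reported by more than two-thirds of nodes, else None."""
    if not results:
        return None
    value, count = Counter(results).most_common(1)[0]
    return value if 3 * count > 2 * len(results) else None
```

With four nodes, three matching results are enough to accept a value even if the fourth node reports something else; with three nodes, two matching results are not.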

5. Service Mesh Worker Operation

5.1 Fractionalizing Services

DSMP enables fractional service operation, where multiple Xnodes share resources to operate a service:

Compute and Storage Sharing: Instead of dedicating one node to run an entire service, multiple Xnodes can combine their resources to run the service collaboratively.

Dynamic Resource Pooling: The Openmesh protocol dynamically allocates compute, storage, and networking resources based on service demand. As the number of consumers increases, additional resources are allocated automatically.

5.2 Service Subscription and Commitment

Consumers can subscribe to public Service Mesh Workers and use their services. DSMP incorporates a commitment mechanism to ensure long-term availability:

Subscription Mechanism: Consumers can subscribe to Service Mesh Workers and pay a fee in Open tokens. This commitment can be reflected as either a one-time payment or a recurring subscription.

Staking for Accountability: Service providers can stake Open tokens to signal accountability. The more they stake, the more confidence consumers have in their reliability.

6. Open Observability Protocol

6.1 Transparent Metrics

The Open Observability Protocol in DSMP provides a transparent layer of metrics that are accessible to all nodes:

Service Usage Statistics: Displays the number of consumers subscribed to a Service Mesh Worker and the resource consumption patterns.

Performance Metrics: Uptime, response time, and reliability are continuously monitored and made available for public review.

Fault Reporting: Errors and failures are logged and available for network-wide review, allowing automatic fault detection and recovery.

6.2 Decentralized Monitoring

Monitoring is decentralized, with metrics collected and aggregated by multiple nodes. There is no single point of failure or control, ensuring resilient and reliable monitoring of services in DSMP.

7. Incentive and Economic Model

7.1 Token-Based Payment and Incentive System

DSMP operates on a token-based economy where Service Mesh Workers are compensated in Open tokens for offering their services. Key components include:

Service Fees: Consumers pay Service Mesh Workers in Open tokens based on their resource usage or subscription model.

Staking and Rewards: Nodes can earn additional tokens by staking tokens and performing tasks correctly. Misbehaving nodes lose a portion of their stake as a penalty.

7.2 Resource Leasing

Xnode operators can lease their idle resources to other nodes through the DSMP:

Compute Leasing: Xnodes can lease their unused compute power to other services that need additional capacity.

Storage Leasing: Xnodes with excess storage can lease it to services that require decentralized storage, receiving Open tokens in return.

8. Fault Tolerance and Recovery Mechanisms

8.1 Automatic Task Reallocation

In the event that a Service Mesh Worker becomes unavailable or fails to complete a task, DSMP automatically reallocates the task to another node:

Fault Detection: The Open Observability Protocol continuously monitors service health and identifies failures.

Task Reassignment: Failed tasks are quickly reassigned to healthy Xnodes to ensure seamless service continuity.
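The detect-and-reassign loop can be sketched as a pure function over the current assignments and the set of healthy Xnodes. The data shapes and the "least-loaded healthy node" policy are assumptions for the example; how health is actually determined by the Open Observability Protocol is outside the scope of this sketch.

```python
from typing import Dict, Set

def reassign_failed(assignments: Dict[str, str], healthy: Set[str]) -> Dict[str, str]:
    """Move tasks from unhealthy nodes to the least-loaded healthy nodes."""
    result = dict(assignments)
    if not healthy:
        return result  # nowhere to move tasks; leave assignments unchanged
    load = {node: 0 for node in healthy}
    for node in result.values():
        if node in load:
            load[node] += 1
    for task, node in sorted(result.items()):
        if node not in healthy:
            # Sorting node names makes the tie-break deterministic.
            target = min(sorted(load), key=lambda n: load[n])
            result[task] = target
            load[target] += 1
    return result

before = {"t1": "x1", "t2": "x2", "t3": "x2"}
after = reassign_failed(before, healthy={"x1", "x3"})
```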

8.2 Redundant Service Execution

Critical services can be executed redundantly across multiple Xnodes, ensuring that even if one node fails, the service remains operational:

Byzantine Fault Tolerance ensures that redundant service execution results are verified for consistency.

9. Conclusion and Future Directions

The Openmesh Decentralized Service Mesh Protocol (DSMP) is a robust, scalable, and fully decentralized service management framework. By leveraging decentralized communication protocols like Kademlia DHT and libp2p, and consensus mechanisms like PoS, PoR, and BFT, DSMP ensures efficient, secure, and fault-tolerant service discovery, communication, and task execution.

Tokenomics

Token distribution

Openmesh DAO

Openmesh's DAO governance model is designed to make key decisions such as resource allocation more democratic. This model empowers our core team to vote on critical issues, ensuring that decisions are made transparently and in the best interest of the community.

As this DAO decides who has the Verified Contributor status, which is required to enter a department, it can be seen as the "Department Owner".

Managing the group of Verified Contributors is this DAO's only responsibility: it adds promising new candidates and removes members who are not involved enough, who abuse the system, or whose continued membership would otherwise harm the Verified Contributors.

Optimistic actions

Because adding Verified Contributors is a low-risk action that is expected to happen regularly, it can be done optimistically. An existing Verified Contributor creates the action to mint a new NFT to a promising candidate and explains the choice. All other Verified Contributors then have 7 days to review the action and, if they disagree, to reject it. A single rejection is enough to prevent the action from happening.
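The optimistic rule reduces to two conditions: the 7-day review period has passed, and nobody has rejected the action. A minimal sketch, with the function and parameter names chosen for the example:

```python
from datetime import datetime, timedelta

REVIEW_PERIOD = timedelta(days=7)

def can_execute(created_at: datetime, now: datetime, rejections: int) -> bool:
    """True once the review period has elapsed with no rejections."""
    return rejections == 0 and now >= created_at + REVIEW_PERIOD

created = datetime(2024, 1, 1)
ready = can_execute(created, datetime(2024, 1, 8), rejections=0)
```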

If someone creates suspicious actions or rejects actions without good reason, this could be grounds for removing their Verified Contributor status. It is recommended to raise the issue with them before resorting to removal.

Voting actions

Any other actions will need at least 20% of all Verified Contributors to vote on it and the majority (>50%) to approve it.
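The quorum and majority thresholds can be checked as follows. The sketch assumes that "majority" means more than 50% of the votes cast and that only yes and no votes exist; the source does not spell out either detail.

```python
def vote_passes(yes: int, no: int, total_contributors: int) -> bool:
    """Check the 20% participation quorum and the >50% approval majority."""
    votes = yes + no
    if total_contributors <= 0:
        return False
    quorum_met = 5 * votes >= total_contributors  # at least 20% voted
    majority = 2 * yes > votes                     # assumption: majority of votes cast
    return quorum_met and majority
```

With 20 Verified Contributors, an action passes with 3 yes and 1 no votes, but not with 2 yes and 1 no (quorum missed) or 2 yes and 2 no (no majority).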

The only action that is expected is the burning of NFTs. Any Verified Contributor who knows they will stop interacting with the platform can also burn their NFT themselves, to save the DAO the hassle of voting.

The Verified Contributors are in complete control of this DAO, so they can decide on any other actions they want to perform, such as building up a treasury.

Opencircle

Learning, Networking, Earning, Growing & Influencing Web3

A go-to place to learn, meet people, connect, and apply what you've learned. Find projects to work on and earn for your contributions, regardless of where you live and work, through OpenR&D.

Academy:

OpenCircle Academy is not just about learning; it’s about mastering the intricacies of Web3 technology. From blockchain basics to advanced decentralized finance (DeFi) systems, our curriculum is curated by industry pioneers and updated regularly to reflect the latest trends and technologies. Interactive courses, hands-on projects, and real-time simulations offer you a practical learning experience that goes beyond just theory.

Open R&D:

OpenR&D is proud to be one of the pioneers of R&D platforms in Web3. We are empowering developers, engineers, and DAOs to improve the developer experience and project management through a truly decentralized R&D platform built for scalable innovation in distributed engineering.

OpenR&D

Our vision is to empower Web3 projects and teams to collaborate seamlessly

OpenR&D enables information to flow freely, enabling efficient project management and distribution of work. We are committed to making development and payment more open, transparent, and accessible for everybody.

Addressing pain points in Open Source and DAOs

Open-source communities face a variety of problems when it comes to development and coordination. Holding contributors accountable and complying with legal requirements are both challenging. By implementing a DAO structure, core teams can assemble and create a structure in which they become the final decision-makers for a variety of responsibilities.

However, we need an environment that improves the developer experience, so that developers can perform better while governance stays truly decentralized. We addressed these issues specifically with our R&D development portal. We aim to improve the developer experience while maintaining some control over development, and to introduce mechanisms that incentivize proper behavior and empower developers. Importantly, we wanted this portal to scale as we do and not limit our ability to grow or be agile.

Empowering developers and engineers

Empowering developers is crucial to scaling engineering. There are a variety of obstacles within centralized businesses that prohibit developers from owning their work and sharing it. Using a platform like ours, developers will have a more liberating experience that provides access to task lists with complete transparency.

DAO friendly

OpenR&D supports Decentralized Autonomous Organizations (DAOs): entities with no central authority that are governed by their community members. Members of a DAO who own community tokens can vote on initiatives for the entity. The smart contracts and code governing the DAO's operations are publicly disclosed and available for everyone to see.

Community

We are committed to quality technical and academic research on modern data architecture, data mesh, scalable consensus algorithms, and everything open-source!

Openmesh Network Summary

Openmesh partners

Primary resource allocation

Openmesh Expansion Program (OEP) 2024 Participation

Participation Logistics

1. Sponsors Meetup

Selected sponsors will meet the Openmesh team to discuss the roadmap and understand how their contributions will accelerate Openmesh's expansion. This event will also serve as an opportunity for in-depth discussions and clarifications regarding the sponsorship process.

2. Payment and Confirmation Phase (Sep 1st - Sep 26th)

Whitelist and payment confirmation.

3. Post-Participation and Minting Phase (Sep 26th, 2024 - Sep 26th, 2025)

Sponsors will receive Cloud Credits, redeemable as Xnodes, which can be utilized to support the Decentralized Cloud Initiative (DCI) and other Openmesh services, or converted into sOPEN or OPEN tokens. As early backers, sponsors may also receive governance tokens as a token of appreciation for their support. These tokens will enable sponsors to contribute to the network's governance, particularly in roles such as network operators, Verified Contributors, and Openmesh Verified Resource Providers.